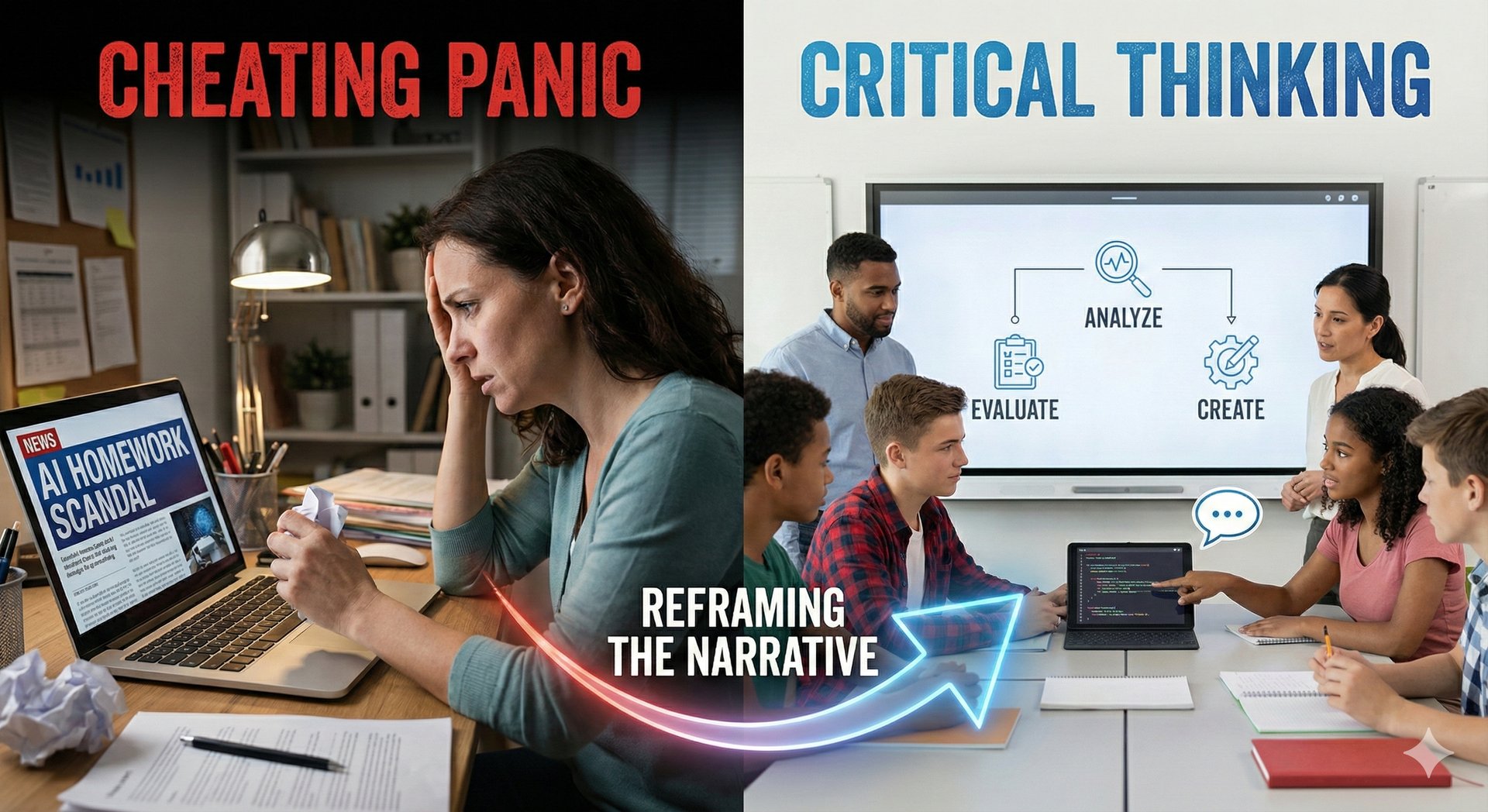

From "Cheating Panic" to "Critical Thinking": How to Reframe AI for Your Parent Community

The headlines are scary, and your inbox is likely full of concerned emails. Here is how school leaders can shift the parent narrative around Generative AI from fear to future-readiness.

EDUCATION

ParentEd AI Academy Staff

11/27/2025 · 4 min read

Picture your Monday morning inbox. Amidst bus route complaints and staffing issues, you notice a growing trend in subject lines: "ChatGPT concerns," "Is my child's classmate a robot?" and "What is the school doing about AI cheating?"

If this sounds familiar, you are not alone. Since the public release of tools like ChatGPT, a wave of "AI anxiety" has washed over parent communities. It’s a predictable reaction. For decades, the model of education many parents recognize has been tied to independent output: the take-home essay, the research paper, the problem set.

When a machine can generate those outputs in seconds, it feels like the floor has dropped out of academic integrity.

As school leaders and educators, our instinct might be to double down on plagiarism policies or invest in dubious AI-detection software. But reactive measures only reinforce the dominant narrative: AI is a weapon for cheating.

To prepare our students for the reality they will graduate into, we must proactively change that narrative. We need to help parents see AI not just as a threat to integrity, but as a catalyst for a higher form of human learning.

Here is how to move your community from panic to partnership.

1. Validate the Fear (Don't Dismiss It)

The first step in effective communication is empathy. Parents are panic-stricken not because they are anti-technology, but because they care deeply about their children's future success and character development. They worry that if a machine does the thinking, their child’s brain will atrophy.

Do not dismiss these concerns as Luddite reactions. Acknowledge them openly in your communications. Yes, academic dishonesty is a real risk. Yes, relying on AI for answers without understanding is detrimental to learning.

By validating their fears, you earn the trust needed to introduce a new perspective. You are signaling: We see the same risks you do, and we are managing them.

2. The Pivot: From "Answer-Getting" to "Question-Asking"

Once you have validated the concerns, you must articulate the educational pivot. The primary value of education is shifting away from rote memorization and basic information synthesis—tasks AI excels at—toward uniquely human skills.

We need to explain to parents that in an AI world, the quality of the answer you get depends heavily on the quality of the question you ask, a skill often called prompt engineering.

How to frame it for parents:

Old Model: We grade students on their ability to retrieve information and assemble it into a basic essay.

New Model: We grade students on their ability to critique, refine, verify, and iterate on information—sometimes using AI as a starting point or a "sparring partner" for ideas.

Share concrete examples of changed assignments. Instead of asking students to "Write a 500-word summary of the Civil War," explain that teachers might now ask students to "Generate a summary of the Civil War using AI, identify three significant biases or omissions in its response, and rewrite it with verified citations."

The focus shifts from the product to the process of evaluation.

3. Doubling Down on Critical Thinking (The New "Basics")

The strongest antidote to "cheating panic" is a robust "critical thinking" offense.

Parents need to understand that AI tools can be confidently wrong. They hallucinate facts and can reproduce societal biases. Skepticism is therefore now a fundamental literacy skill.

Position AI literacy not as "learning to use the cool new tech," but as essential 21st-century civic and professional readiness. The World Economic Forum’s Future of Jobs Report 2023 highlights that "creative thinking" and "analytical thinking" are the top skills deemed essential for workers in the coming years. AI doesn't replace these skills; it makes them more valuable than ever.

We must communicate that by integrating AI thoughtfully, we are teaching students to:

Detect Bias: Whose voices are missing from the AI's output?

Verify Facts: AI is a drafting tool, not an oracle. Students must act as the human "editor-in-chief."

Navigate Ethics: Just because you can generate something, does that mean you should?

When parents understand that the school is teaching their children to be the boss of the algorithm, rather than its servant, the fear of cheating begins to recede.

4. Actionable Communication Strategies

How do you take this message to your community? Don't wait for the next angry email.

The "Future-Ready" Town Hall: Host an evening session dedicated to AI. Don't just talk at parents; show them. Do a live demo of ChatGPT making a factual error, and then model how a teacher would use that moment for instruction.

Showcase Student Work: In your newsletters, highlight examples of assignments where students used AI responsibly to enhance critical thinking, rather than replace it. Show the "rough drafts" and the "prompt history" alongside the final product.

Clear AI Guidelines (Not Just Bans): Publish clear, accessible guidelines on what constitutes responsible vs. irresponsible AI use at different grade levels. UNESCO emphasizes the need for "human-centered" approaches to AI in education, prioritizing human agency and oversight. Your policies should reflect this balance of opportunity and guardrails.

Leading the Conversation

If schools remain silent on AI, the media and anxious neighbor-chatter will fill the void. The narrative will remain stuck on cheating.

By proactively reframing AI as a powerful tool that requires heightened critical thinking, ethical reasoning, and human judgment, you can turn down the heat. You can help your parent community see that you aren't just "managing tech"—you are preparing their children to lead in a complex future.

For Further Reading & Framing: